pointy poisson

What does this task do?

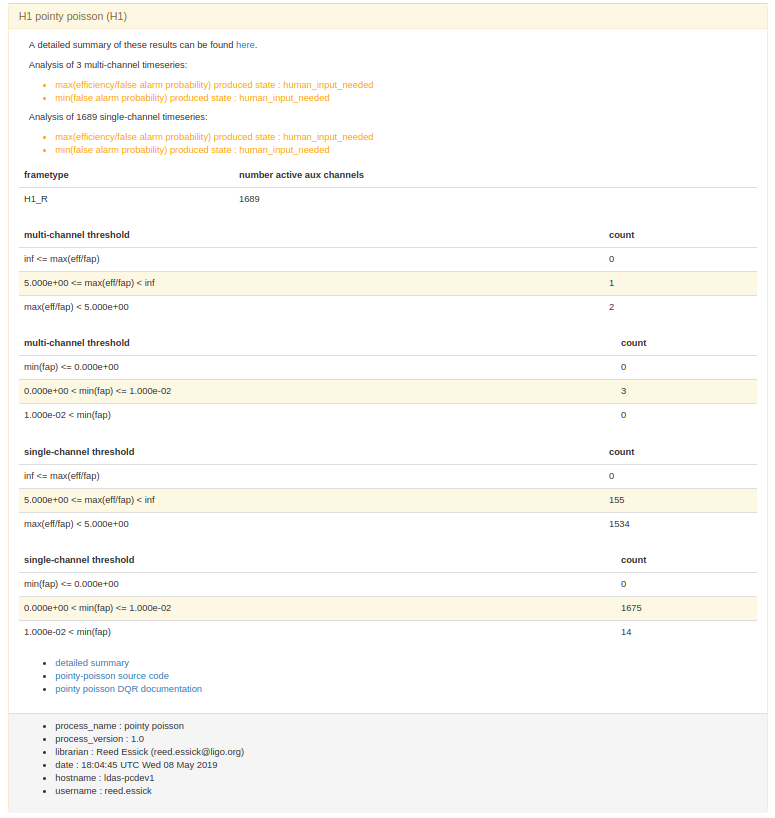

This task runs an end-to-end search for coincident auxiliary triggers based on the pointy-poisson statistic, presenting a list of single-channel and multi-channel veto conditions that exceed a set of thresholds. The basic idea is to perform a null test of the hypothesis that each auxiliary channel produces triggers as a Poisson process. By estimating the probability that an auxiliary trigger is as close or closer to a particular time (chosen based on h(t)), we can determine whether that auxiliary channel is “suspiciously coincident” with target times.
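As a rough illustration of the statistic described above: for a Poisson process with rate r, the probability that at least one trigger falls within ±|Δt| of a fixed time is 1 − exp(−2r|Δt|). The sketch below evaluates the log of that probability. The function name, the two-sided window, and the example numbers are assumptions made for illustration; they are not taken from the DQR source code.

```python
import math


def pointy_logpvalue(dt, rate):
    """Log of the probability that a Poisson process with the given rate (Hz)
    produces a trigger within +/-|dt| seconds of a fixed time.

    Hypothetical helper: the name and signature are illustrative only.
    """
    expected = 2.0 * rate * abs(dt)  # expected number of triggers in the window
    if expected == 0.0:
        return -math.inf  # zero-width window or zero rate gives p = 0
    # p = 1 - exp(-expected); expm1 keeps precision when `expected` is small
    return math.log(-math.expm1(-expected))


# Hypothetical numbers: 0.1 triggers/s, nearest trigger 50 ms from the target time
print(pointy_logpvalue(0.05, 0.1))
```

Small (very negative) values indicate a trigger that is closer to the target time than the channel's trigger rate would lead one to expect.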

This is done by finding which auxiliary channel triggers are coincident with some target window (e.g., the template duration) and then estimating the rate of triggers as a function of their SNR in each active auxiliary channel over a much wider window (typically ±1000 s padded around the on-source window). The wider window also provides an estimate of the behavior of each channel, i.e., cumulative distribution functions of both the glitch detection efficiency (using glitches identified in the target channel above an SNR threshold) and the false alarm probability (estimated as the fraction of time spent beyond some threshold). Note that this means the background estimation includes the on-source window; the background window is expected to be wide enough that including the on-source window will not significantly bias the conclusions drawn within it.
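The quantities estimated from the wide window can be sketched as follows. This is a minimal sketch under stated assumptions: triggers are represented as (time, SNR) pairs, the timeseries is uniformly sampled, and “beyond a threshold” is taken to mean “at or below” because smaller log p-values are more significant. All function names are hypothetical.

```python
def trigger_rate(triggers, start, end, snr_threshold):
    """Rate (Hz) of triggers with SNR >= snr_threshold inside [start, end).

    `triggers` is an iterable of (time, snr) pairs (assumed representation).
    """
    count = sum(1 for t, snr in triggers if start <= t < end and snr >= snr_threshold)
    return count / (end - start)


def false_alarm_probability(samples, threshold):
    """Fraction of uniformly spaced timeseries samples at or below `threshold`,
    i.e. the fraction of time the channel spends beyond the threshold."""
    return sum(1 for v in samples if v <= threshold) / len(samples)


def efficiency(samples_at_targets, threshold):
    """Fraction of target times (glitches in the target channel) at which the
    timeseries value is at or below `threshold`."""
    if not samples_at_targets:
        return 0.0
    return sum(1 for v in samples_at_targets if v <= threshold) / len(samples_at_targets)


# Hypothetical data: three aux triggers in a 2000 s rate-estimation window
triggers = [(10.0, 6.0), (20.0, 12.0), (1500.0, 8.0)]
print(trigger_rate(triggers, 0.0, 2000.0, snr_threshold=8.0))
```

Evaluating `efficiency` and `false_alarm_probability` over a range of thresholds traces out the cumulative distributions (and hence an ROC curve) for a channel.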

In addition to analyzing the single-channel timeseries, it combines triggers from auxiliary channels coincident with the on-source window into a few multi-channel veto conditions:

lognaivebayes: treat the pointy-statistic for each channel as a p-value and consider all channels to be independent. The joint p-value in this naive-bayes approximation is the product of the individual p-values.

logmin: take the minimum p-value over all channels instead of multiplying the p-values.

logPOST: estimate a likelihood ratio using cumulative distributions (efficiencies and deadtimes measured using target times contained within the wide rate_estimation_window) and map this into the log of the probability that there is no glitch, assuming equal priors: \(\mathrm{logPOST} = -\log(1+\Lambda)\), where \(\Lambda = \mathrm{efficiency}/\mathrm{deadtime}\).
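The three combinations above can be written compactly when working with log p-values. The sketch below assumes natural logarithms and one log p-value per channel; the function names mirror the condition names, but their signatures are hypothetical.

```python
import math


def lognaivebayes(logpvalues):
    """Product of independent p-values, expressed as a sum of logs."""
    return sum(logpvalues)


def logmin(logpvalues):
    """Minimum p-value over all channels (log is monotonic, so take the min of logs)."""
    return min(logpvalues)


def logpost(efficiency, deadtime):
    """-log(1 + Lambda) with Lambda = efficiency / deadtime."""
    if deadtime <= 0:
        raise ValueError("deadtime must be positive to form the likelihood ratio")
    return -math.log1p(efficiency / deadtime)


# Hypothetical p-values for two channels
logps = [math.log(0.1), math.log(0.2)]
print(lognaivebayes(logps), logmin(logps), logpost(0.5, 0.5))
```

With equal priors, the probability of no glitch is 1/(1+Λ), so a large likelihood ratio drives logPOST toward large negative values.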

States are computed for each single-channel veto condition and for the multi-channel veto conditions separately, and then combined using the default DQR state logic to produce an overall summary state. For each timeseries, several criteria are used to determine whether that timeseries should veto the candidate:

max(efficiency/false alarm probability): using the efficiency and FAP estimates from the wider rate estimate window, this threshold looks for timeseries values within the target window that correspond to efficiency/false alarm probability above some threshold. The idea is that large ratios will correspond to “confident” veto conditions, and therefore we look for such conditions within the target window.

min(false alarm probability): using the FAP estimates from the wider rate estimate window, this threshold looks for timeseries values within the target window that are particularly rare. The idea is that rare things happening within the target window may be reason to veto the event, although, unlike max(eff/fap), this does not check whether the rare thing actually couples to h(t).
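A minimal sketch of how these two criteria could map onto return states, using the “at or above” (EFF/FAP) and “at or below” (FAP) conventions of the danger and warning thresholds. The helper names and the numerical thresholds are hypothetical, and the handling of FAP = 0 is an assumption.

```python
import math


def max_eff_fap(effs, faps):
    """Largest efficiency/FAP ratio over the samples inside the target window."""
    def ratio(eff, fap):
        if fap > 0:
            return eff / fap
        return math.inf if eff > 0 else 0.0  # assumed convention for FAP == 0
    return max(ratio(e, f) for e, f in zip(effs, faps))


def eff_fap_state(value, danger, warning):
    """EFF/FAP at or above `danger` fails; at or above `warning` needs human input."""
    if value >= danger:
        return "fail"
    if value >= warning:
        return "human_input_needed"
    return "pass"


def minfap_state(value, danger, warning):
    """FAP at or below `danger` fails; at or below `warning` needs human input."""
    if value <= danger:
        return "fail"
    if value <= warning:
        return "human_input_needed"
    return "pass"


# Hypothetical efficiencies/FAPs for two samples in the target window
effs, faps = [0.2, 0.9], [0.1, 0.03]
print(eff_fap_state(max_eff_fap(effs, faps), danger=10.0, warning=3.0))
print(minfap_state(min(faps), danger=1e-4, warning=1e-2))
```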

Pointy poisson will also include a link to more detailed HTML summary pages. These present high-level summaries of the criteria mentioned above as well as individual summary pages for each single-channel and multi-channel timeseries, showing exactly what the timeseries did during the target window, an estimate of the associated ROC curve from the wider rate estimate window, and an enumeration of timeseries minima and the corresponding target times (if any).

What are its return states?

pass

human_input_needed

fail

error

How was it reviewed?

This has not been reviewed!

How should results be interpreted?

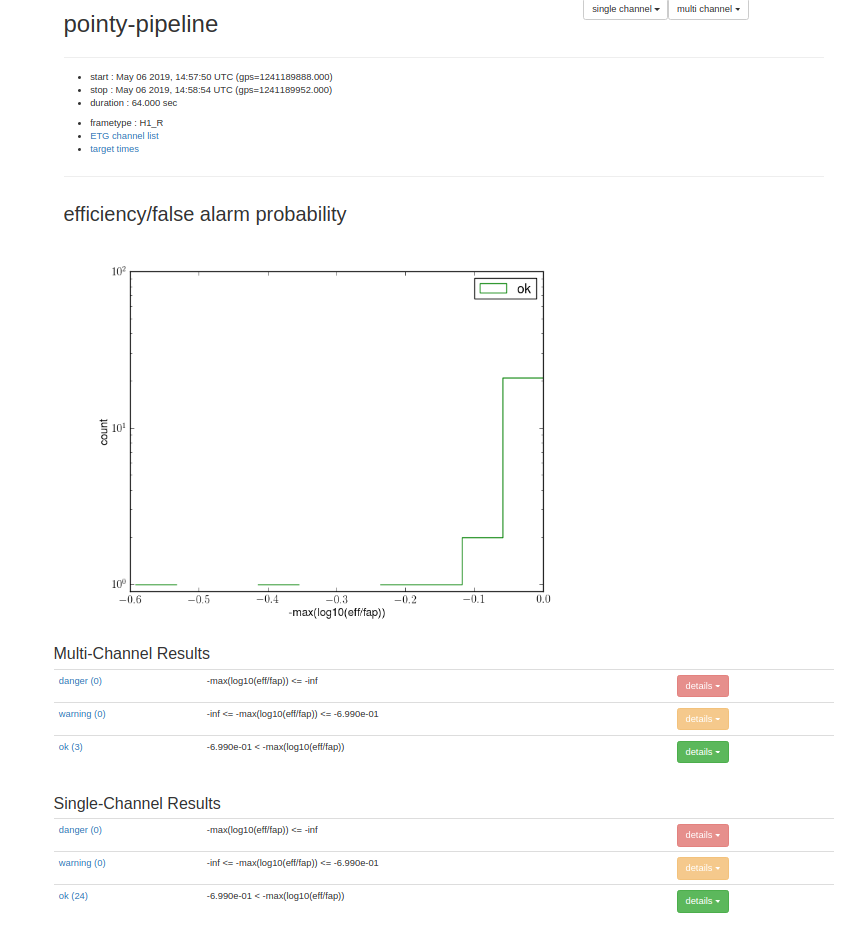

Analysts should first look at the summaries for the separate criteria. These are presented within the DQR HTML interface:

Each summary divides the associated channels into 3 sets:

danger: causes the task to return state=fail. Please note, this may not be reason to reject the candidate as it may be cleanly witnessed by, e.g., 2 out of 3 detectors.

warning: merits follow-up (human_input_needed)

ok: should not be considered reason to reject the event (pass)

It is worth noting that logPOST is expected to be as sensitive or more sensitive than any other timeseries, although this has not been definitively shown to be the case. Therefore, analysts should look at all criteria for both single- and multi-channel timeseries.

If any timeseries warrant follow-up, analysts should follow the link to the detailed summary pages, which is presented both in the top-level summary and in the list of links at the bottom of the panel. The top-level detailed summary includes much of the same information as the DQR HTML interface, but also has histograms showing the distribution of timeseries performance for each criterion and sorted links to single-timeseries summary pages. Again, these are divided into multi- and single-channel sets for convenience.

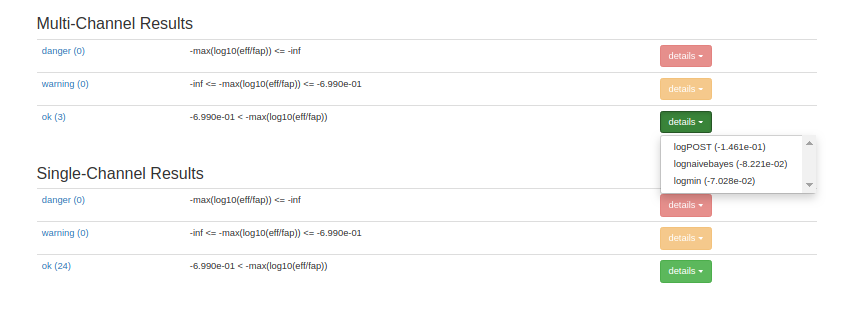

Analysts can easily access sorted lists of single-timeseries pages via the drop-down menus.

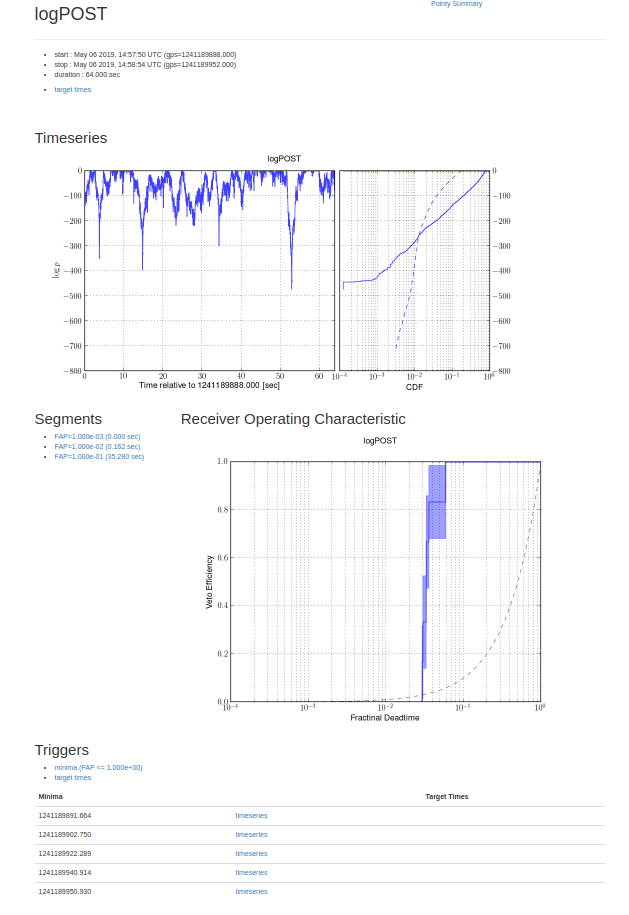

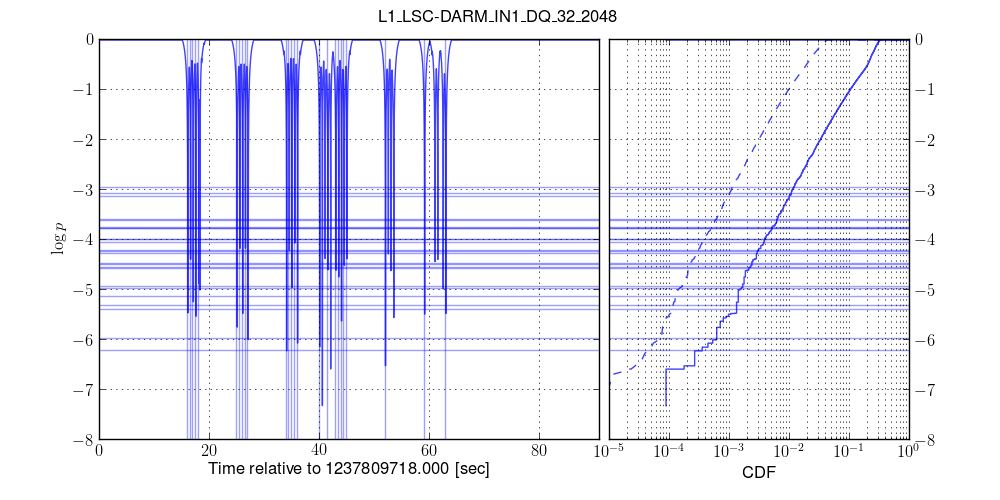

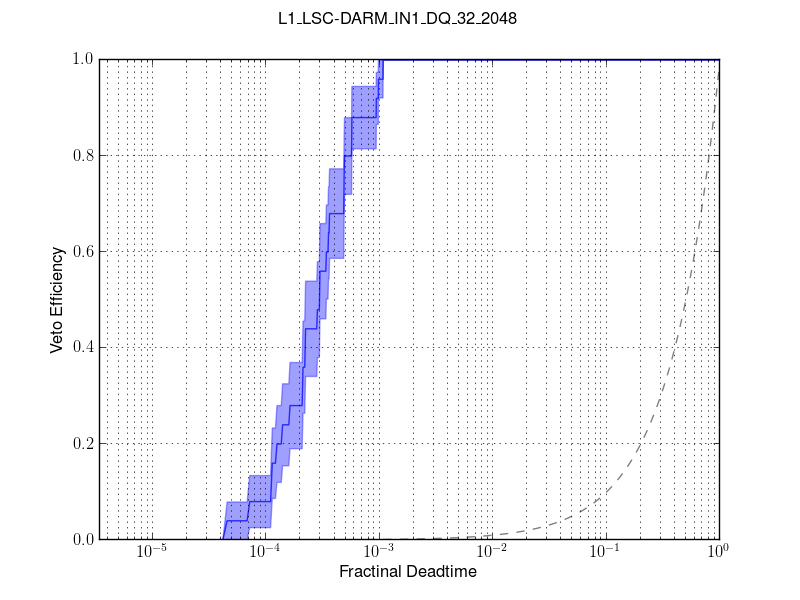

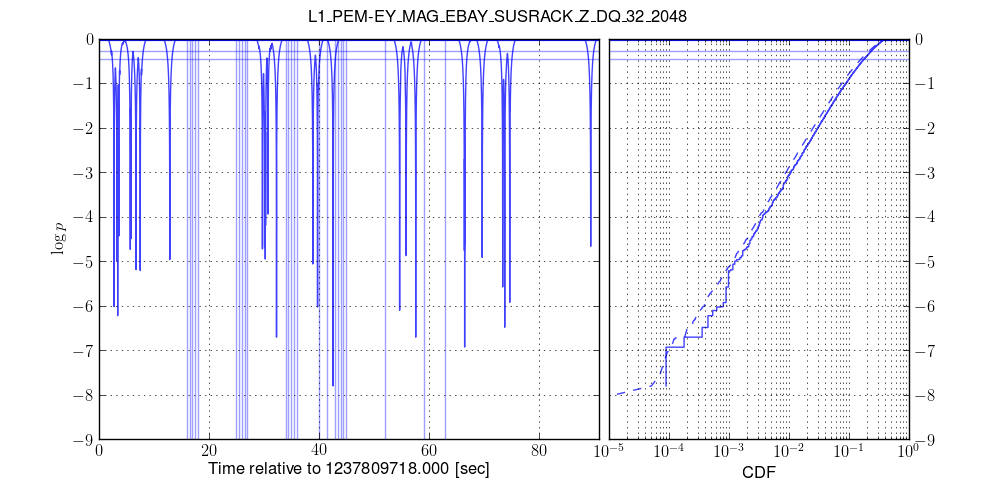

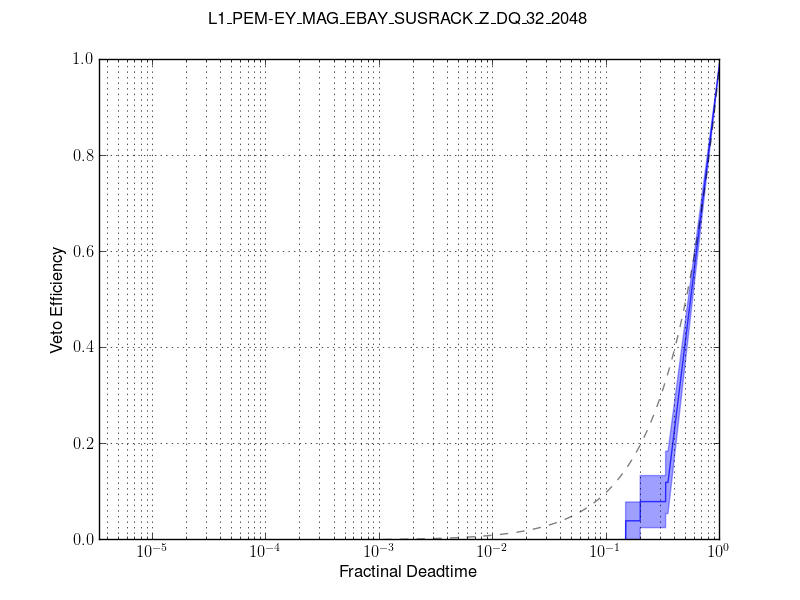

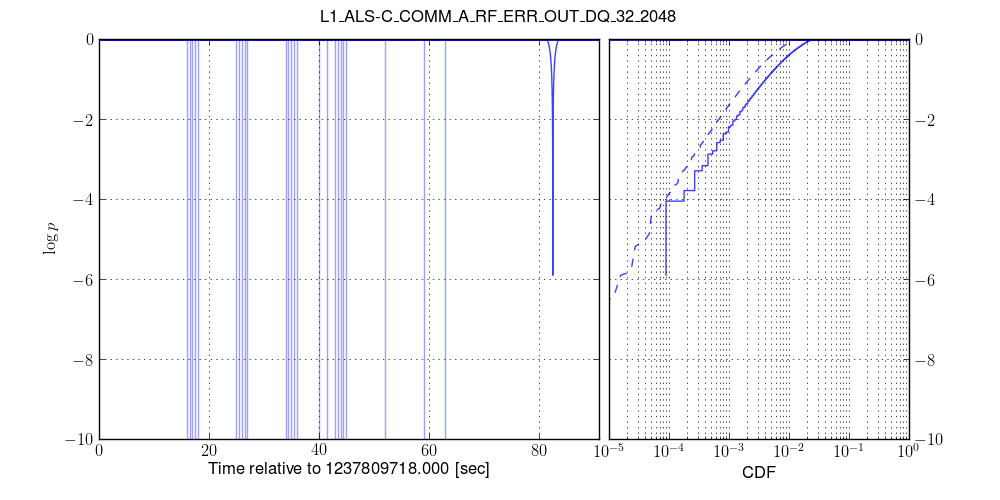

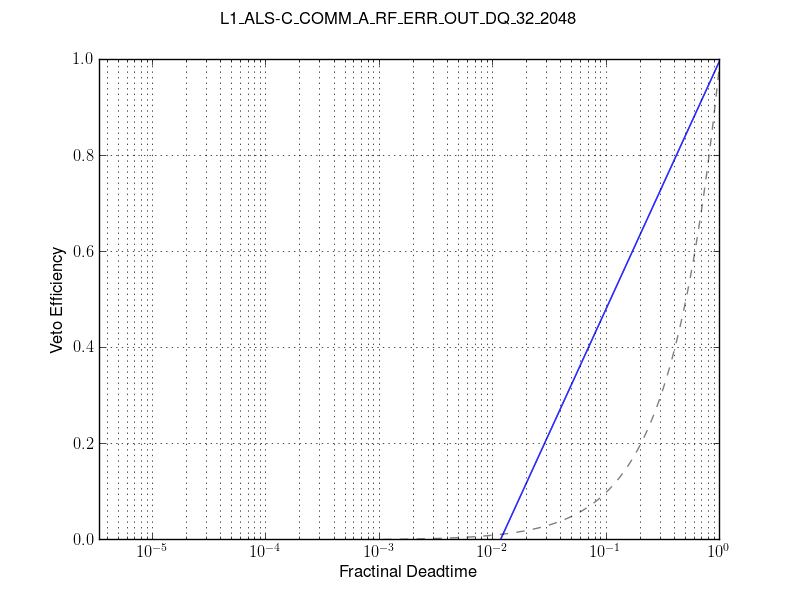

On each single-timeseries summary page, analysts can examine:

the behavior of the timeseries within the target window

the ROC curve estimated using the entire rate estimate window

lists of segments within the target window corresponding to several different FAP thresholds

lists of minima within the target window and any associated target times

depending on how the single-timeseries pages were generated, these may or may not include links to zoomed timeseries surrounding the minima and/or target times as well as Q-scans
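The items listed above (segments below a FAP threshold, timeseries minima, and associated target times) can be extracted from a sampled timeseries along the following lines. This is only an illustrative sketch: it assumes uniformly spaced samples with spacing `dt`, defines a minimum as a sample strictly below both neighbors, and uses a hypothetical `tolerance` to associate target times with a minimum.

```python
def local_minima(times, values):
    """(time, value) pairs for interior samples strictly below both neighbors."""
    return [
        (times[i], values[i])
        for i in range(1, len(values) - 1)
        if values[i] < values[i - 1] and values[i] < values[i + 1]
    ]


def segments_below(times, values, threshold, dt):
    """Contiguous [start, end) segments in which values stay at or below threshold."""
    segments = []
    start = None
    for t, v in zip(times, values):
        if v <= threshold:
            if start is None:
                start = t  # a new segment opens at this sample
        elif start is not None:
            segments.append((start, t))  # segment closes at the first sample above
            start = None
    if start is not None:
        segments.append((start, times[-1] + dt))  # segment runs to the end of the data
    return segments


def nearby_targets(minimum_time, target_times, tolerance):
    """Target times within `tolerance` seconds of a minimum."""
    return [t for t in target_times if abs(t - minimum_time) <= tolerance]


# Hypothetical FAP timeseries sampled once per second
times = [0, 1, 2, 3, 4, 5]
faps = [0.5, 0.01, 0.02, 0.5, 0.001, 0.5]
print(segments_below(times, faps, threshold=0.05, dt=1))
print(local_minima(times, faps))
```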

These are visible in the following example logPOST page.

For any single timeseries that warrants follow-up, analysts should look for clear dips in the timeseries, which correspond to confident veto conditions. Following up on these dips, via the enumerated list of zoomed timeseries and Q-scans or otherwise, should allow users to determine whether the veto condition flagged interesting noise in h(t) and, if so, whether that noise could have caused the candidate.

Below, we show a single-channel timeseries and ROC curve for clearly witnessed noise (specifically, hardware injections witnessed by an unsafe channel: L1_LSC-DARM_IN1_DQ_32_2048).

If analysts observe behavior like this “in the wild,” it is strong evidence for terrestrial noise in the detector during the time of interest. This does not mean the candidate must be rejected, however. Extreme care is needed when assessing whether the witnessed terrestrial noise is actually responsible for the candidate rather than a coincident astrophysical signal.

Below, we show the same plots for the same hardware injections, but for a channel that does not witness them well even though it is very active (L1_PEM-EY_MAG_EBAY_SUSRACK_Z_DQ_32_2048).

And here is one for a channel that is particularly quiet.

If analysts observe behavior like either of these, then there is no evidence for terrestrial noise witnessed by auxiliary channels within the time of interest.

What INI options, config files are required?

frametype (string)

the frametype to use when generating triggers.

this is automatically set to H1_R for H1 pointy poisson and similarly for L1 pointy poisson.

target_channel (string)

the KW channel name to use when defining target times (glitches) for efficiency estimation once pointy-poisson logpvalue timeseries are obtained

this is automatically set to H1_CAL-DELTAL_EXTERNAL_DQ_32_2048 for H1 pointy poisson and similarly for L1 pointy poisson.

unsafe_chanlist_path (string)

the path to a list of unsafe channels with each row formatted as channel samplerate

default values are used for derived tasks

unsafe_channels_path (string)

the path to a list of unsafe KW channel names with each row containing a single channel

default values are used for derived tasks

chanlist_path (string, optional)

the path to a list of channels to be used in the analysis with each row formatted as channel samplerate

pointy poisson will determine this dynamically based on frametype unless this is specified

thresholds (space delimited list of floats, optional)

the set of coincidence thresholds used when extremizing the pointy-poisson statistic (compute the statistic using aux triggers above each of these thresholds and then return the minimum)

if not specified, pointy poisson will use all significances present in the set of triggers for each channel, which is computationally expensive.

coinc_window (float, optional)

the window used to determine which auxiliary channels are “active” around the time of interest. Padded symmetrically so that we check within [start-coinc_window, end+coinc_window) to determine for which aux channels we need to generate wider triggers sets for rate estimation.

rate_estimation_left_window (float, optional)

the padding before start used to define trigger production for rate estimation

rate_estimation_right_window (float, optional)

the padding after end used to define trigger production for rate estimation

max-eff-fap-danger (float, optional)

EFF/FAP at or above this threshold are returned as “fail”. Otherwise we report “pass”

max-eff-fap-warning (float, optional)

EFF/FAP at or above this threshold are returned as “human_input_needed”. Otherwise we report “pass”

minfap-danger (float, optional)

FAP at or below this threshold are returned as “fail”. Otherwise we report “pass”

minfap-warning (float, optional)

FAP at or below this threshold are returned as “human_input_needed”. Otherwise we report “pass”
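To make the option types concrete, the sketch below parses an example INI fragment with Python's standard `configparser` and derives the coincidence and rate-estimation windows from it. The option names come from the list above; the section name, file paths, and all numerical values are hypothetical, and the actual DQR configuration layout may differ.

```python
import configparser

# Hypothetical INI fragment: section name, paths, and numbers are illustrative only
EXAMPLE_INI = """
[pointy poisson]
frametype = H1_R
target_channel = H1_CAL-DELTAL_EXTERNAL_DQ_32_2048
unsafe_chanlist_path = /path/to/unsafe_chanlist.txt
unsafe_channels_path = /path/to/unsafe_channels.txt
thresholds = 15 25 50
coinc_window = 1.0
rate_estimation_left_window = 1000
rate_estimation_right_window = 1000
max-eff-fap-danger = 10
max-eff-fap-warning = 3
minfap-danger = 1e-4
minfap-warning = 1e-2
"""


def load_options(text, section="pointy poisson"):
    """Parse the options into native Python types."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    sec = parser[section]
    return {
        "frametype": sec["frametype"],
        "target_channel": sec["target_channel"],
        # space-delimited list of floats; empty list if the option is absent
        "thresholds": [float(x) for x in sec.get("thresholds", "").split()],
        "coinc_window": sec.getfloat("coinc_window"),
        "left": sec.getfloat("rate_estimation_left_window"),
        "right": sec.getfloat("rate_estimation_right_window"),
    }


def windows(start, end, opts):
    """Half-open coincidence and rate-estimation windows around [start, end)."""
    coinc = (start - opts["coinc_window"], end + opts["coinc_window"])
    rate = (start - opts["left"], end + opts["right"])
    return {"coinc": coinc, "rate": rate}


opts = load_options(EXAMPLE_INI)
print(windows(100.0, 104.0, opts))
```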

Are there any derived tasks that are based on this one?

The following derived tasks reference standard frametypes, unsafe chanlists, and unsafe channels stored within the DQR source code.

H1 pointy poisson

L1 pointy poisson